The essential guide

When creating an Agent, there are two topics I follow closely:

1- Writing effective instructions (Declarative Agent – Microsoft 365 Copilot Extensibility).

2- Optimizing prompts and topic configuration (Copilot Studio).

Regardless of which tool we use, it is good practice to follow a well-defined path.

Let’s start with the first of the two points (we will cover the second in another article).

Writing effective instructions:

Instruction components:

A well-structured set of instructions ensures that the Agent understands its role, its tasks, and how to interact with users.

Proceeding step by step, here are the three core components of the instructions:

- Purpose: the agent’s goal and role.

- Guidelines: general guidelines, tone, and restrictions.

- Skills: the tasks the agent can perform.

Instructions can also include:

- Step-by-step instructions.

- Error handling and limitations.

- Feedback and iteration.

- Interaction examples.

- Non-standard terms.

- Follow-up and closing.

There is only one rule: “The more precise we are, the better our agent will work.”
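To make this structure concrete, here is a minimal Python sketch that keeps the three core components separate and joins them, plus any optional sections, into a single instruction text. The `AgentInstructions` class, its field names, and the plain-text section layout are my own illustrative assumptions, not part of Microsoft 365 Copilot: the builder only ever receives the final text.

```python
from dataclasses import dataclass, field


@dataclass
class AgentInstructions:
    """Hypothetical container for the instruction components described above."""

    purpose: str                  # the agent's goal and role
    guidelines: list[str]         # tone, general rules, restrictions
    skills: list[str]             # the tasks the agent can perform
    # Optional extras, e.g. "Error handling" or "Follow-up and closing".
    optional_sections: dict[str, list[str]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Purpose, Guidelines, and Skills are the core components, so require them.
        if not self.purpose.strip() or not self.guidelines or not self.skills:
            raise ValueError("Purpose, Guidelines, and Skills are all required")

    def render(self) -> str:
        """Join the components into one instruction text, one titled section each."""
        parts = [f"Purpose:\n{self.purpose}"]
        parts.append("Guidelines:\n" + "\n".join(f"- {g}" for g in self.guidelines))
        parts.append("Skills:\n" + "\n".join(f"- {s}" for s in self.skills))
        for title, lines in self.optional_sections.items():
            parts.append(f"{title}:\n" + "\n".join(f"- {line}" for line in lines))
        return "\n\n".join(parts)


instructions = AgentInstructions(
    purpose="You are the agent that helps users plan and track project schedules.",
    guidelines=["Maintain a friendly and precise tone during interactions."],
    skills=["Provide explanations about the projects."],
    optional_sections={"Error handling": ["If you cannot proceed, ask the user to clarify."]},
)
text = instructions.render()
print(text)
```

Keeping each component in its own field makes it easy to change one part (for example the tone) and re-test without touching the rest.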

Purpose:

Let’s start by defining the agent’s role and area of expertise, including the primary function it performs.

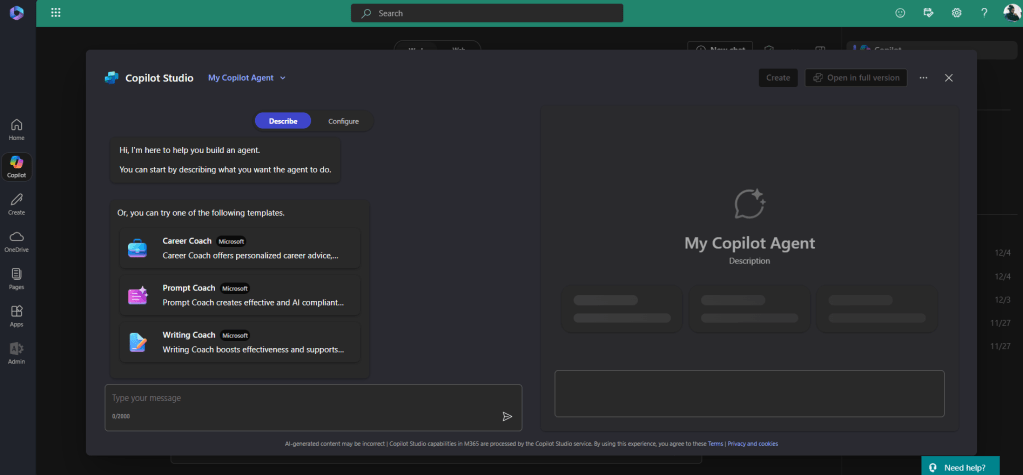

I am on the Microsoft 365 Copilot screen.

As you can see, the creation process is directly assisted by Copilot, which asks for information.

First, we enter the description.

“You are the agent that helps users plan and track project schedules.”

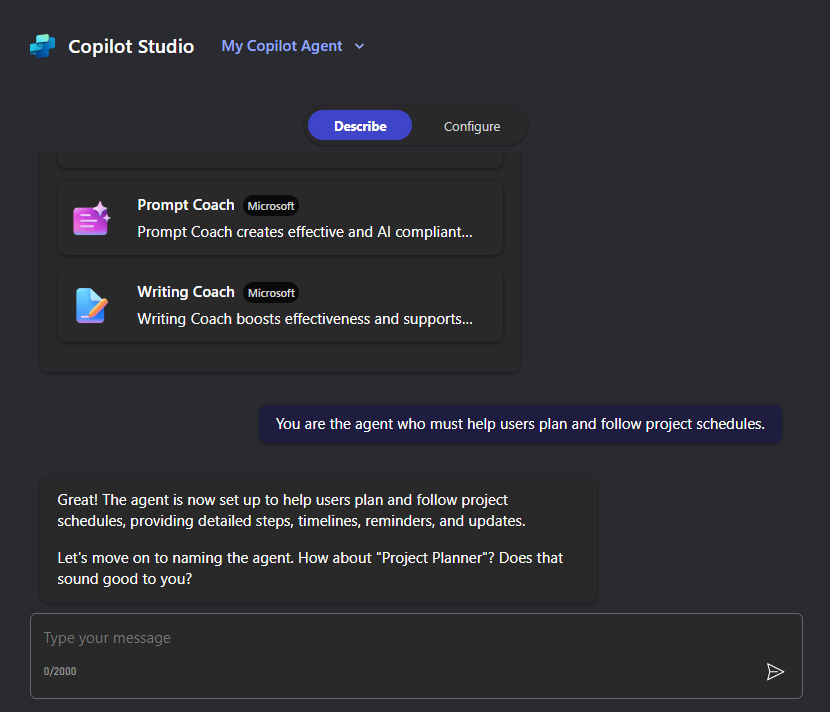

Next, Copilot asks for the name and, finally, the instructions.

My Agent is called “Agent for Planning.”

I decide to include part of the description I wrote earlier, adding details that better define the purpose.

“You are the agent that helps users plan and track project schedules. You must support users to make their work better and more efficient.”

Let’s keep what we have written for the instructions. We will add more shortly.

Guidelines:

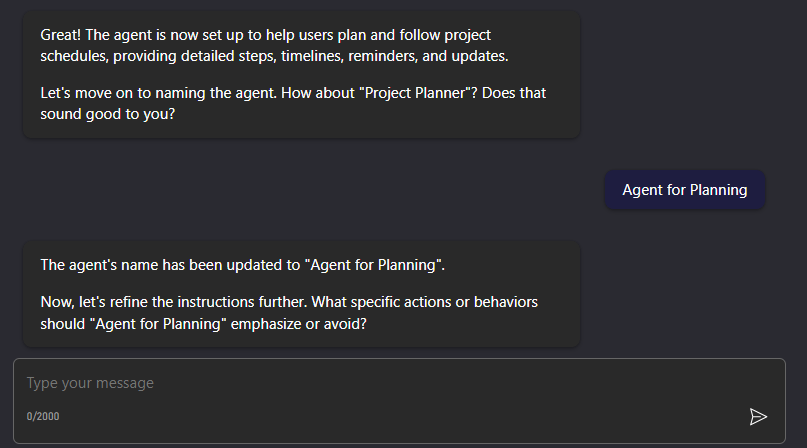

Now let’s define how the agent should communicate, for example:

“Maintain a friendly and precise tone during interactions.”

Skills:

Last but not least, we define a list of tasks that the Agent is expected to perform. These can include:

- Generating suggestions.

- Automating tasks.

- Providing explanations.

- And much more.

“When a complex question or request is posed, break down concepts and simplify the various topics for the user. Provide explanations about the projects.”

At this point, we have much more precise instructions.

“You are the agent that helps users plan and track project schedules. You must support users to make their work better and more efficient. Maintain a friendly and precise tone during interactions. When a complex question or request is posed, break down concepts and simplify the various topics for the user. Provide explanations about the projects.”
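The builder stores these values for us, but the same three pieces (name, description, instructions) are also fields of the declarative agent manifest used by pro-code tooling such as Teams Toolkit. Here is a minimal sketch that writes such a manifest for “Agent for Planning”. The `"version": "v1.0"` value and the 8,000-character limit on `instructions` are assumptions to verify against the current manifest schema; `build_manifest` is a hypothetical helper.

```python
import json

# Assumed limit on the "instructions" field; check the current manifest schema.
MAX_INSTRUCTIONS_CHARS = 8000


def build_manifest(name: str, description: str, instructions: str) -> dict:
    """Hypothetical helper returning a minimal declarative agent manifest."""
    if len(instructions) > MAX_INSTRUCTIONS_CHARS:
        raise ValueError("instructions exceed the assumed character limit")
    return {
        "version": "v1.0",  # assumed schema version
        "name": name,
        "description": description,
        "instructions": instructions,
    }


instructions = (
    "You are the agent that helps users plan and track project schedules. "
    "You must support users to make their work better and more efficient. "
    "Maintain a friendly and precise tone during interactions. "
    "When a complex question or request is posed, break down concepts and "
    "simplify the various topics for the user. Provide explanations about the projects."
)

manifest = build_manifest(
    name="Agent for Planning",
    description="You are the agent that helps users plan and track project schedules.",
    instructions=instructions,
)
print(json.dumps(manifest, indent=2))
```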

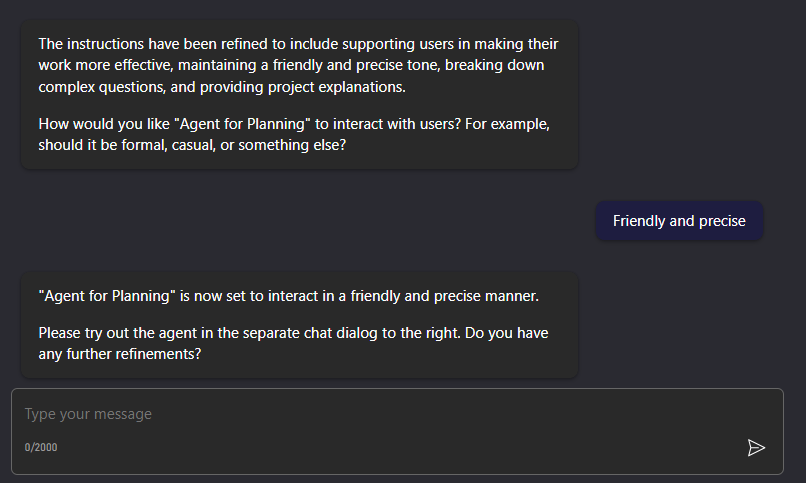

Let’s enter what we have decided so far.

As we can see, Copilot is proactive and asks for further information.

Indeed, I could further refine the focus.

Step-by-step instructions:

I can define the workflow structure and outline the order in which to complete tasks.

“Follow these steps:

- Ask for the user’s name.

- Ask specifically what they need.

- Identify the topic.

- Help the user simplify the steps of project development.

- Ask the user if they need to create a draft of what has been done so far.”

Error handling and limitations:

I can also handle errors and any limitations.

“If you encounter a situation where you cannot proceed, respond with ‘I need more information to assist you. Could you clarify?’”
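The workflow and the fallback reply can be kept as data and rendered into the instruction text, so the order of the steps stays explicit. This is a minimal Python sketch; `render_workflow` is a hypothetical helper, and the numbered format (instead of bullets) is my own choice to make the sequence unambiguous.

```python
STEPS = [
    "Ask for the user's name.",
    "Ask specifically what they need.",
    "Identify the topic.",
    "Help the user simplify the steps of project development.",
    "Ask the user if they need to create a draft of what has been done so far.",
]
FALLBACK = "I need more information to assist you. Could you clarify?"


def render_workflow(steps: list[str], fallback: str) -> str:
    """Number the steps in order, then append the error-handling rule."""
    lines = ["Follow these steps:"]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    lines.append(
        "If you encounter a situation where you cannot proceed, "
        f"respond with '{fallback}'"
    )
    return "\n".join(lines)


workflow = render_workflow(STEPS, FALLBACK)
print(workflow)
```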

Feedback & iteration:

But that’s not all… I decide to include the following instructions:

“After providing a suggestion, ask if it meets my needs or if modifications are necessary.

If I provide feedback, adjust your response or propose alternative solutions.

Continue to refine your suggestions based on my input until I am satisfied.”
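These three instructions describe a simple loop: suggest, ask for feedback, revise, and stop when the user is satisfied. The sketch below simulates that loop with a scripted conversation, which can help when writing test scenarios for the agent. `refine_until_satisfied` is hypothetical, `None` stands for “I am satisfied”, and the `max_rounds` cap is my own safeguard for the simulation, not something the agent enforces.

```python
from typing import Callable, Optional


def refine_until_satisfied(
    propose: Callable[[Optional[str]], str],
    get_feedback: Callable[[str], Optional[str]],
    max_rounds: int = 5,
) -> str:
    """Suggest, ask for feedback, and revise until no more feedback arrives."""
    suggestion = propose(None)  # first suggestion, no feedback yet
    for _ in range(max_rounds):
        feedback = get_feedback(suggestion)
        if feedback is None:  # None means "I am satisfied"
            break
        suggestion = propose(feedback)  # adjust based on the feedback
    return suggestion


# Scripted conversation: one round of feedback, then the user is satisfied.
scripted_feedback = iter(["Add a testing phase.", None])


def propose(feedback: Optional[str]) -> str:
    return "Plan v1" if feedback is None else f"Plan revised: {feedback}"


def get_feedback(suggestion: str) -> Optional[str]:
    print(f"Agent: {suggestion}\nDoes this meet your needs?")
    return next(scripted_feedback)


final_plan = refine_until_satisfied(propose, get_feedback)
print(final_plan)
```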

There are still a couple of points worth covering. I will not use them in this demo, but it is useful to know what they are.

Non-standard terms:

It is useful to define any non-standard or organization-specific terms in the instructions. This ensures that the agent understands these terms and uses them correctly in its responses. Providing a glossary of such terms can benefit both the agent and users. Term definitions can be part of the general instructions or of a specific skill description.
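A glossary is easy to maintain as a mapping from term to definition and render into an instruction section. In this minimal sketch, `render_glossary` is a hypothetical helper, and the two entries are illustrative placeholders to replace with your organization’s own vocabulary.

```python
def render_glossary(terms: dict[str, str]) -> str:
    """Turn a term -> definition mapping into an instruction section."""
    lines = ["Glossary (use these terms as defined here):"]
    for term in sorted(terms):  # alphabetical order keeps the section stable
        lines.append(f"- {term}: {terms[term]}")
    return "\n".join(lines)


# Illustrative entries only.
glossary = render_glossary({
    "RAID log": "the register of risks, assumptions, issues, and dependencies.",
    "Phase gate": "the review meeting that closes each project phase.",
})
print(glossary)
```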

Follow-up and closing:

Ask the agent to remind users that it is available for further assistance and to offer help with related tasks. Also, instruct the agent to close a session properly, summarizing the results or suggesting next steps.

Best practices:

It is important to keep the agent’s instructions structured and to follow an iterative process, testing and improving them until the agent provides satisfactory results.

- Be specific.

- Break tasks into smaller steps.

- Use clear syntax.

- Use examples.

- Use “Do” rather than “Do not.”

- Add “Knowledge” sources.
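Some of these checks can be automated before each test round. Here is a minimal sketch of a linter that flags “Do not” phrasing and overly long instructions. `lint_instructions` is hypothetical, the regular expression is only a heuristic, and the 8,000-character default is an assumed limit to verify against the current manifest schema. For example, “Don't share budget figures.” could become “Keep budget figures confidential.”

```python
import re

# Heuristic: phrasing that says what not to do instead of what to do.
NEGATIVE_PHRASING = re.compile(r"\b(do not|don't|don’t)\b", re.IGNORECASE)

# Assumed limit; check the current declarative agent manifest schema.
MAX_INSTRUCTIONS_CHARS = 8000


def lint_instructions(text: str, max_chars: int = MAX_INSTRUCTIONS_CHARS) -> list[str]:
    """Return readable warnings; an empty list means no issues were found."""
    warnings = []
    for number, line in enumerate(text.splitlines(), start=1):
        if NEGATIVE_PHRASING.search(line):
            warnings.append(f"line {number}: rephrase as a positive 'Do' instruction")
    if len(text) > max_chars:
        warnings.append(f"instructions are {len(text)} characters; limit is {max_chars}")
    return warnings


draft = "Maintain a friendly and precise tone.\nDon't share budget figures."
for warning in lint_instructions(draft):
    print(warning)
```

I treat these as warnings rather than errors: a “Do not” line is occasionally the clearest option, and the real test is still running the agent and iterating.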

Demo:

It’s time to test our Agent.

“Hello! I have a project for a client where I need to manage 4 topics:

1- Migrating non-Microsoft data sources to Fabric. I want to centralize all data in OneLake for greater security and without volume limits, even when tables have tens of millions of rows.

2- I will use Dataflow Gen2 to import the data.

3- I need to schedule migration activities using Microsoft Data Pipeline.

4- I will need to create the necessary reports. A report representing the client’s revenue. A report representing the products in stock.

Help me plan everything.”

Here is my agent’s response:

“Hello Paolo! It sounds like a very interesting and complex project. To help you plan everything, we can break down the project into several phases and activities. Here is a proposed plan:

Phase 1: Planning and Preparation

1- Data source analysis: Identify all non-Microsoft data sources that need to be migrated.

2- Requirements definition: Establish security and volume requirements for the data to be centralized in OneLake.

3- Resource planning: Assign the necessary resources for data migration and import.

Phase 2: Data Source Migration

1- OneLake configuration: Set up OneLake to centralize data.

2- Data migration: Use Dataflow Gen2 to import data from external sources to OneLake.

3- Migrated data verification: Ensure that data has been migrated correctly and meets security requirements.

Phase 3: Scheduling Migration Activities

1- Creating the Data Pipeline: Use Microsoft Data Pipeline to schedule migration activities.

2- Monitoring and management: Monitor the progress of migration activities and manage any issues.

Phase 4: Creating Reports

1- Report definition: Establish requirements for revenue and inventory reports.

2- Report development: Create reports using appropriate reporting tools.

3- Verification and validation: Ensure that reports are accurate and meet client requirements.

Phase 5: Delivery and Support

1- Report delivery: Present reports to the client.

2- Training and support: Provide training and support to the client on using the new reports and the centralized system.

I recommend drafting this plan and adapting it to your client’s specific needs. If you need further details or assistance on a specific point, let me know!”

I also ask for another piece of information:

“How can I use the Dataflow Gen2 tool to make it as efficient as possible?”

Interestingly, in this case our Agent searches the web for information.

“To use Dataflow Gen2 effectively, there are some best practices you can follow:

Separation of data sources: Create a separate dataflow for each type of source, such as on-premises, cloud, SQL Server, Spark, and Dynamics 365. This facilitates troubleshooting and avoids internal limits during dataflow refresh.

Using calculated tables: Calculated tables can improve dataflow performance by up to 25 times. Use the calculation engine to improve performance.

Dataflow backups: Deleted dataflows cannot be recovered, so it’s important to regularly back them up. You can enable Azure storage connection in your Power BI workspace to save a copy of the dataflow definition and snapshots in a data lake.

Developing complex dataflows: Follow patterns and guidelines to develop large, performant dataflows. This includes creating complex business logic and implementing it on a large scale.

Reusing dataflows: Reuse dataflows in different environments and workspaces to optimize the data preparation process.

Monitoring and management: Use tools like Power Automate or Azure Logic Apps to export the dataflow definition to a JSON file and store it in SharePoint or Azure Data Lake Gen2. This allows you to automate the backup process.

By following these best practices, you can make the most of Dataflow Gen2’s capabilities and ensure an efficient and secure data migration process.

If you need further details or assistance on a specific point, let me know!”

With links included!

Boom Done 💣
