How intelligent is artificial intelligence?

The number of AI models has exploded, and the question I asked myself is: “How can I measure how intelligent artificial intelligence really is?”

In the ever-evolving world of artificial intelligence, choosing the right model can make the difference between the success and failure of a project. Today, we’ll explore the main AI models available, analyzing their features, strengths, and ideal use cases.

Since it is not practical to cover every model on the market, I’ve narrowed the comparison down to a handful of widely used ones.

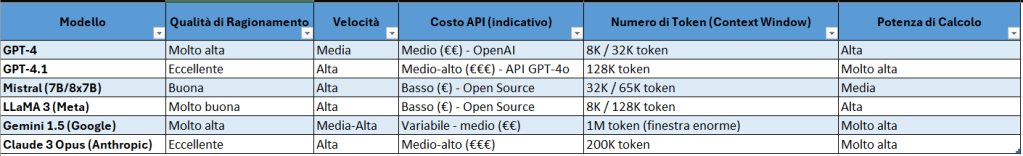

Models

- GPT-4

- GPT-4.1

- Mistral (7B/8x7B)

- LLaMA 3 (Meta)

- Gemini 1.5 (Google)

- Claude 3 Opus (Anthropic)

Comparison Parameters

To get a clear overview, I used the following parameters:

- Quality of reasoning

- Speed

- API cost (indicative)

- Number of tokens (Context Window)

- Computational power

Quality of Reasoning

The quality of reasoning in an AI model indicates how well the model connects information and follows logical steps to arrive at a correct and coherent answer.

GPT-4.1 and Claude 3 Opus are currently the top models for advanced reasoning, semantic understanding, and context. They are useful in fields like law, medicine, consulting, and project management.

Gemini 1.5 excels at maintaining context thanks to its enormous window (up to 1 million tokens), making it excellent for lengthy documents and complex analysis.

LLaMA 3 and Mistral have good quality but remain weaker in abstract reasoning and complex operations compared to larger closed-source models.
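One rough way to put a number on reasoning quality is to run the same small set of questions through each model and count the correct answers. The sketch below is only an illustration: the three questions, the `stub_model` function, and the lenient string comparison are all assumptions made for this example, and a real evaluation would replace `stub_model` with a call to the model's API and use a far larger benchmark.

```python
from typing import Callable

# Hypothetical mini-benchmark: each item pairs a question with its expected answer.
BENCHMARK = [
    ("If all cats are mammals and Tom is a cat, is Tom a mammal? (yes/no)", "yes"),
    ("What is 17 + 26?", "43"),
    ("Anna is taller than Ben, Ben is taller than Carl. Who is shortest?", "carl"),
]


def normalize(text: str) -> str:
    """Strip whitespace and trailing punctuation, then lowercase, for a lenient match."""
    return text.strip().strip(".!").lower()


def reasoning_accuracy(ask: Callable[[str], str], benchmark=BENCHMARK) -> float:
    """Return the fraction of benchmark questions answered correctly."""
    correct = sum(normalize(ask(question)) == expected for question, expected in benchmark)
    return correct / len(benchmark)


def stub_model(question: str) -> str:
    """Stand-in for a real API call; it deliberately gets the arithmetic wrong."""
    canned = {"Tom": "Yes.", "17": "42", "Anna": "Carl"}
    for keyword, answer in canned.items():
        if keyword in question:
            return answer
    return ""


score = reasoning_accuracy(stub_model)
print(f"accuracy: {score:.2f}")
```

Running the same benchmark against several models gives comparable scores, although exact-match scoring like this only works for questions with short, unambiguous answers.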

Speed

The speed of an AI model indicates how quickly it can provide a response or complete a task after receiving input.

Mistral, LLaMA 3, and Claude generally deliver responses faster than the GPT models, partly due to lower overhead in their deployments.

GPT-4.1 is considerably faster than the classic GPT-4. The same is true of GPT-4o, a separate model in the same family whose “o” stands for “omni”, not “optimized”.

Gemini is slightly slower, especially when using large contexts, but compensates with quality.
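Speed claims like these are easy to check for your own workload by timing a few identical requests. The following is a minimal sketch using only the Python standard library; `fake_model` is a placeholder invented for this example, and in practice you would pass a function that sends the prompt to the model you want to measure.

```python
import statistics
import time
from typing import Callable


def measure_latency(call: Callable[[str], str], prompt: str, runs: int = 5) -> dict:
    """Time several identical calls; return median and worst-case latency in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        call(prompt)
        timings.append(time.perf_counter() - start)
    return {"median_s": statistics.median(timings), "max_s": max(timings)}


def fake_model(prompt: str) -> str:
    """Placeholder that simulates a 10 ms response; swap in a real client call."""
    time.sleep(0.01)
    return "ok"


result = measure_latency(fake_model, "Hello", runs=3)
print(result)
```

The median is reported because a single slow request (a cold start, for example) would distort an average over so few runs.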

API Cost

The API cost of an AI model refers to what you pay for each call or API usage, based on factors like the number of tokens processed, calculation time, or resources used.

Open-source models (Mistral, LLaMA 3) are very affordable or even free when self-hosted (but require hardware resources).

Claude, GPT-4.1, and Gemini are commercial models with paid APIs. They are more expensive but offer high-level performance and greater reliability.

Actual costs depend on factors like:

- Number of tokens used

- Frequency of requests

- Degree of parallelization
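To see how token counts and request frequency combine, here is a small back-of-the-envelope estimate. The prices in the usage example are made-up placeholders, not any vendor's actual rates, and the sketch assumes simple per-token billing with separate input and output prices. Parallelization is left out of this sketch.

```python
def monthly_cost(
    input_tokens: int,
    output_tokens: int,
    requests_per_day: int,
    price_in_per_million: float,
    price_out_per_million: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend from per-request token counts and per-million-token prices."""
    per_request = (
        input_tokens * price_in_per_million + output_tokens * price_out_per_million
    ) / 1_000_000
    return per_request * requests_per_day * days


# Hypothetical prices: 2.00 per million input tokens, 8.00 per million output tokens.
cost = monthly_cost(
    input_tokens=1_500,
    output_tokens=500,
    requests_per_day=1_000,
    price_in_per_million=2.0,
    price_out_per_million=8.0,
)
print(f"estimated monthly cost: {cost:.2f}")
```

With these placeholder numbers each request costs 0.007, so 1,000 requests a day for 30 days comes to roughly 210 per month. Substituting the published prices of the model you are evaluating gives a first-order comparison.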

Number of Tokens (Context Window)

The context window is the maximum number of tokens (words or parts of words) that the model can process in a single request, prompt and response combined. It affects both cost and performance.

Gemini (1M) and Claude (200K) are unbeatable for analyzing entire books, large databases, or lengthy conversations.

GPT-4 Turbo and GPT-4o (128K) offer an excellent balance between context capacity and response quality, while GPT-4.1 extends the window to about 1M tokens through the API.

Open-source models are often limited to 32K–65K tokens, which is sufficient for most common use cases.
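A quick way to apply these numbers is to estimate how many tokens a document needs and check which windows it fits into. The sketch below uses window sizes quoted in this article and a rough rule of thumb of about four characters per token for English text. That ratio is an assumption: real tokenizers differ by model and language, so treat the result as an estimate only.

```python
# Context window sizes in tokens, as quoted in this article.
CONTEXT_WINDOWS = {
    "Gemini 1.5": 1_000_000,
    "Claude 3 Opus": 200_000,
    "Open-source (lower bound)": 32_000,
}

CHARS_PER_TOKEN = 4  # rough heuristic for English text; an assumption, not a tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def models_that_fit(text: str, reserved_for_output: int = 1_000) -> list[str]:
    """List the models whose window holds the text plus room for the response."""
    needed = estimate_tokens(text) + reserved_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


document = "word " * 100_000  # 500,000 characters, roughly 125,000 tokens
print(models_that_fit(document))
```

For this 500,000-character example only the two larger windows qualify, which matches the point that very long documents call for the large-context models.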

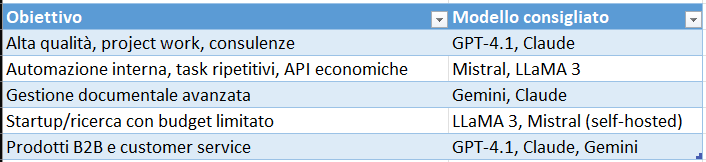

Strategic Adoption Considerations

The indicators above point to a few practical guidelines.

If you need deep reasoning and broad context, Claude 3 and GPT-4.1 are the best.

If you need flexibility, low cost, and self-hosting, go with LLaMA 3 or Mistral.

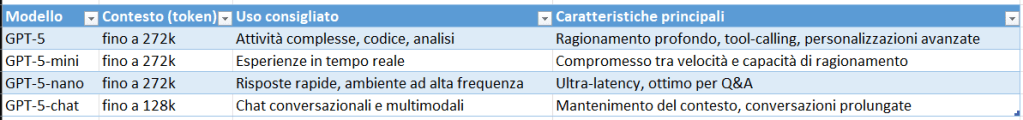

GPT-5

GPT-5 Model Features in Azure AI Foundry

OpenAI released GPT-5 a few days ago; you can read my article here:

Let’s go into a bit more detail about the available features:

- 1. GPT-5 (full reasoning)

  - The full and most powerful model, designed for deep reasoning, complex analysis, and sophisticated code generation, with extended context up to 272k tokens.

  - Provides advanced features like reasoned planning (preamble), parameters such as reasoning_effort (from minimal to high) and verbosity, support for parallel tool calls, and multimodal input.

  - Ideal for projects that require high accuracy and transparent explanations of the reasoning process.

- 2. GPT-5-mini

  - A slimmer variant, designed for real-time experiences, with light but effective reasoning and support for tool calling.

  - Same context capabilities as GPT‑5 (up to 272k tokens), but focused on speed and efficiency.

- 3. GPT-5-nano

  - Built for ultra-low latency while maintaining good Q&A abilities.

  - Perfect for applications with high request volumes or environments sensitive to response times.

- 4. GPT-5-chat

  - Optimized for natural, multi-turn, and multimodal conversations, with a context window of 128k tokens.

  - Ideal for chatbots or corporate digital assistants that must maintain context and coherence across long, complex conversations.

All GPT‑5 models are orchestrated via a model router, which automatically picks the most suitable variant depending on task complexity, performance needs, and cost. This way, you don’t have to make manual selections, improving efficiency and ease of use.
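To make the routing idea concrete, here is a toy rule-based selector built only from the variant descriptions above. It is not how the actual model router decides, since that logic is not described here. The `Task` fields, the `pick_variant` function, and the order of the rules are all hypothetical choices made for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of a request, used only for this illustration."""
    prompt_tokens: int
    needs_deep_reasoning: bool = False
    multi_turn_chat: bool = False
    latency_sensitive: bool = False


def pick_variant(task: Task) -> str:
    """Toy routing rules derived from the variant descriptions in this article."""
    if task.needs_deep_reasoning:
        return "gpt-5"  # full reasoning model
    if task.multi_turn_chat and task.prompt_tokens <= 128_000:
        return "gpt-5-chat"  # conversation-optimized, 128k context
    if task.latency_sensitive:
        return "gpt-5-nano"  # lowest latency
    return "gpt-5-mini"  # balanced default for everything else


print(pick_variant(Task(prompt_tokens=5_000, needs_deep_reasoning=True)))
print(pick_variant(Task(prompt_tokens=200, latency_sensitive=True)))
```

A production router would weigh cost and measured quality as well, but even this simple ordering shows the trade-off: the most capable variant is chosen only when the task needs it.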

Main Features of GPT-5

Faster and more efficient reasoning

GPT-5 reaches its answers with fewer tokens, using 50–80% fewer output tokens than o3, which improves performance and computational efficiency.

Lower rate of hallucinations and greater reliability

GPT-5 makes about 20% fewer factual errors than GPT-4o on production-style responses.

In reasoning mode, errors drop by 70% compared to o3.

In multimodal scenarios (e.g., missing images), GPT-5 gives a misleading answer in only about 9% of cases, whereas o3 did so in 86.7% of cases.

Extended context: up to 272k tokens

GPT-5 supports long context windows (up to 272k tokens for the full model, as listed above), ideal for complex documents, code, contracts, research, and conversational archiving.

Advanced multimodality

Processes text, images, audio, and video in real time—integrating these modes for richer, contextual understanding.

Persistent memory and real-time learning

Introduces durable memory that keeps preferences and context between sessions. Some versions include learning through user feedback within controlled limits.

Integration and agentic capability

GPT-5 can autonomously use tools (e.g., browser, diagram generation, code execution) to respond in a single interaction.

It can create apps, sites, and games, and it can connect to tools like Gmail and Google Calendar, operating almost as an autonomous assistant.

The next generation AI is already here: explore, experiment, and take your innovation beyond platforms—the future awaits you.

And you, how will you seize these opportunities?

Thanks to Alessia Ramsamy for collaborating as co-author of all content.

Boom, done 💣!
