Evaluating agents with Microsoft Copilot Studio: the decisive step towards excellence

In a world where conversational automation is increasingly central, the real challenge is not only creating intelligent agents, but measuring their effectiveness. Without accurate evaluation, you cannot tell whether a change has actually improved the agent. This is where the Agent Evaluation feature of Microsoft Copilot Studio comes into play.

The problem: how can you tell if your agent is really working?

Many teams deploy chatbots and virtual assistants without concrete tools to analyse performance. The result? Inconsistent user experiences, vague metrics and difficulty justifying investments. A structured approach is needed to turn data into insight.

The solution: create targeted evaluations

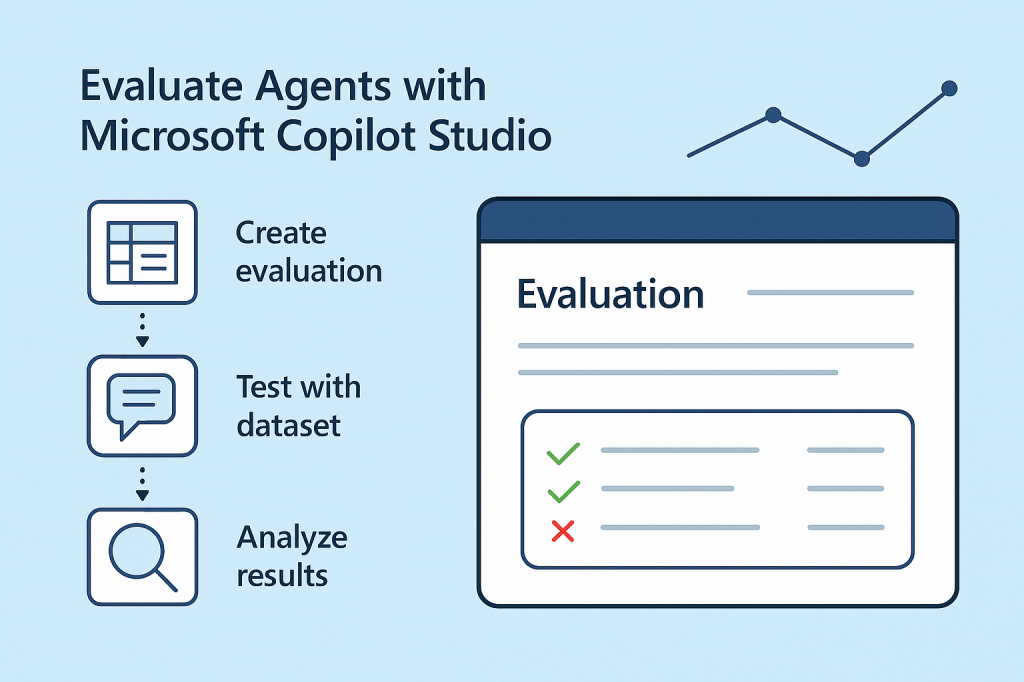

Copilot Studio introduces an evaluation system based on real scenarios, which allows you to:

- Define clear success criteria.

- Create test datasets to simulate interactions.

- Run automatic evaluations with objective metrics.

- Analyse results to identify strengths and areas for improvement.

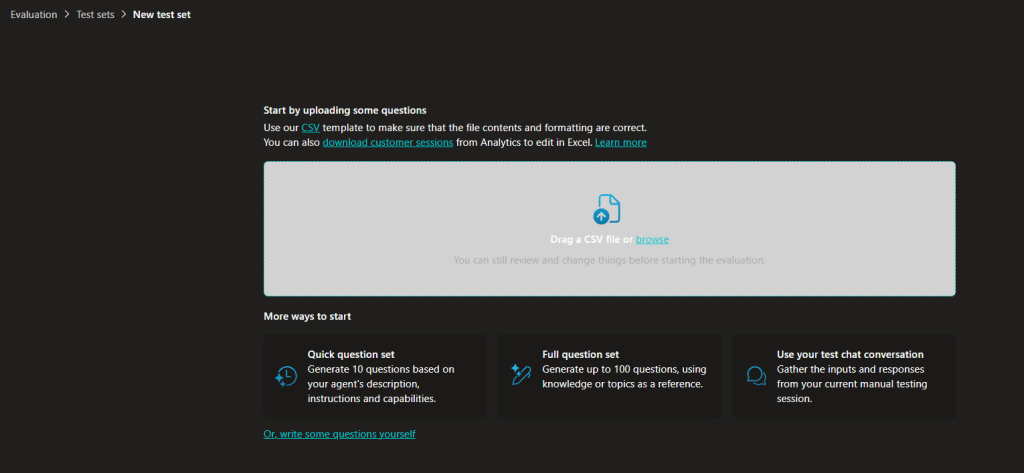

The process is simple: start by creating an evaluation, select the reference conversations and set parameters such as accuracy, completeness and tone. Everything is managed directly from the platform, without added complexity.
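To make the idea concrete, here is a minimal sketch of what an evaluation run does conceptually: a small test dataset, a success criterion per question, and an aggregate score. This is not the Copilot Studio API; the names `TestCase`, `completeness`, `evaluate` and `demo_agent` are hypothetical, and the keyword-based completeness check is a deliberately simple stand-in for the metrics the platform computes for you.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TestCase:
    """One row of a hypothetical test dataset."""
    question: str
    required_keywords: list[str]  # success criterion: what a good answer must mention


def completeness(response: str, required_keywords: list[str]) -> float:
    """Fraction of required keywords found in the response (case-insensitive)."""
    if not required_keywords:
        return 1.0
    text = response.lower()
    hits = sum(1 for kw in required_keywords if kw.lower() in text)
    return hits / len(required_keywords)


def evaluate(agent: Callable[[str], str], cases: list[TestCase],
             threshold: float = 1.0) -> dict:
    """Run every test case through the agent and aggregate the results."""
    results = []
    for case in cases:
        response = agent(case.question)
        score = completeness(response, case.required_keywords)
        results.append({
            "question": case.question,
            "score": score,
            "passed": score >= threshold,
        })
    pass_rate = sum(r["passed"] for r in results) / len(results) if results else 0.0
    return {"pass_rate": pass_rate, "results": results}


def demo_agent(question: str) -> str:
    """Stand-in for a real agent: returns canned answers (assumption for the demo)."""
    canned = {
        "What are your opening hours?":
            "We are open from 9:00 to 17:00, Monday to Friday.",
        "How do I reset my password?":
            "Go to Settings and choose Change password.",
    }
    return canned.get(question, "Sorry, I don't know.")


CASES = [
    TestCase("What are your opening hours?", ["9", "17"]),
    TestCase("How do I reset my password?", ["settings", "reset link"]),
]

report = evaluate(demo_agent, CASES)
print(f"Pass rate: {report['pass_rate']:.0%}")
for row in report["results"]:
    print(row["question"], "->", "pass" if row["passed"] else "fail", row["score"])
```

The second test case fails because the canned answer never mentions a reset link. A gap like this is exactly what a structured evaluation is meant to surface before users run into it.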

The benefits for your business

- Data-driven decisions: no more guesswork, just evidence.

- Continuous optimisation: improve your agent iteratively.

- Superior user experience: more relevant and natural responses.

- Cost reduction: fewer errors, greater efficiency.

A broader perspective

This feature is not just a technical tool: it’s a paradigm shift. It means moving from a “create and release” logic to one of constant monitoring and improvement, in line with responsible AI best practices.

Evaluating an agent is not optional: it is the key to transforming a virtual assistant into a true strategic ally. With Copilot Studio, you have the tools to do this simply, safely and at scale.

Boom, done 💣!
