I have been thinking about writing this article for a few months, but the pace of change across the whole AI world has not made it easy.

I have tried to set some fixed points on the timescales for AI education and literacy. I believe we need to bridge the gap between practical use and deep understanding: there is little point in owning a Ferrari without knowing how it differs from other cars, how it “behaves”, how it should be driven and, above all, how powerful it is and how to use that power to our advantage.

Let’s start with the basics…

Today, AI is present in so many aspects of our lives: from medicine to schools, from social networks to self-driving cars.

Whether we like it or not, AI is everywhere—or almost!

Think about web searches, text translations, ordering a product on an e-commerce site or analysing company data.

That is why it is essential to learn about it, to understand its strengths and limitations, and to know how to use it responsibly.

Why AI education is essential today

- AI is no longer just a technical topic but a cross-cutting skill, like English or critical thinking.

- People will interact with intelligent systems in every process: productivity, creativity, data analysis, decision-making.

- Without a cultural foundation, there is a risk of relying on AI uncritically, or, on the contrary, rejecting it out of distrust.

AI Education: Which skills are really needed?

Cognitive skills

- Critical thinking

- Source evaluation

- Model logic

Operational skills

- Prompt engineering

- Use of AI tools in workflows

- Integration with automations

Ethical skills

- Awareness of bias*

- Privacy and security

- Knowledge of system limitations

*Bias, or distortion, is a systematic and involuntary inclination that pulls judgement away from rationality, producing a subjective and distorted perception of reality.

AI Literacy ≠ Knowing How to Use AI

Using an AI tool is different from understanding how it works.

A new form of literacy is needed, which includes:

- fundamentals (what is a language model, what is training)

- limitations and biases

- interpretation of results

- responsibility in use

Being literate in AI means being able to distinguish between what a machine can really do and what is still science fiction.

It also means knowing the risks, such as those to privacy and security, and learning to ask critical questions about how data is used.

Starting with small steps is the best way: getting informed, experimenting with available AI technologies and sharing knowledge with others.

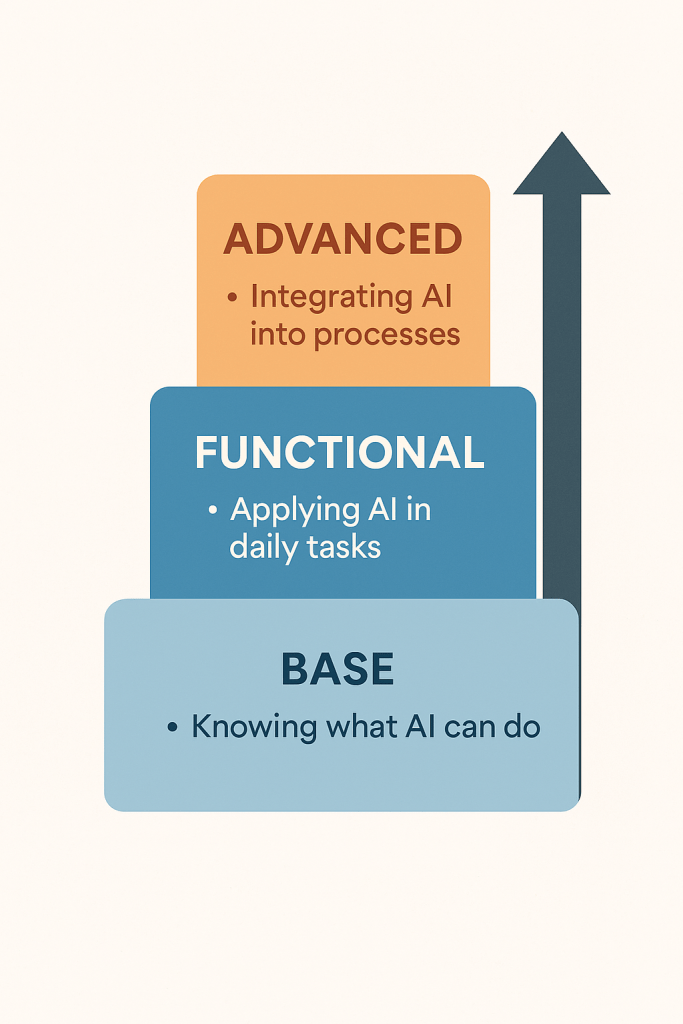

I believe AI literacy should be divided into three levels:

- Basic Literacy: knowing what an LLM is, what “probabilistic” means, what it can and cannot do.

- Functional Literacy: knowing how to use AI with critical sense in daily tasks.

- Advanced Literacy: integrating AI into processes, automations, analytical models and strategic decisions.

The Role of AI in Learning

For the first time in history, technology can actively help us in the learning process. AI, in fact, becomes:

- a personalised tutor

- a creative partner

- an editor

- a research assistant

But this implies new educational responsibilities: how to avoid cognitive shortcuts, dependency or loss of critical skills?

Moreover, cultural rules are needed, not “just” technical ones. This is not just about developers or industry professionals:

“Technical discussions on bias, datasets and parameters are useless if there isn’t a culture of responsible use.”

Schools, like companies, must invest in informed and competent training: teaching people about AI is what leads to deeper and fairer use of it.

I have imagined different “levels” and written some examples about them:

Primary school (ages 6–10)

Goal: curiosity, concreteness, zero technical jargon

Method: play, discovery, analogies.

Practical examples

Stories generated and corrected

The AI tells a story and the children look for mistakes.

→ Lesson: AI can make errors.

Game: “Who is the AI?”

Children put questions to a voice assistant and discover what it can and cannot do.

→ Lesson: AI gives answers, but it doesn’t think like a person.

Drawings “made together with AI”

Children create a drawing and then ask the AI to transform it into a different style.

→ Lesson: AI is a creative tool, not a replacement.

Lower secondary school (ages 11–13)

Goal: developing critical thinking

Method: challenges, comparing answers, discussing mistakes.

Practical examples

- Comparing two AI answers

Students put the same question to two different tools and compare the results.

→ Lesson: AI is neither uniform nor objective.

- Mini‑project: creating a guide for responsible AI use

Students define rules for using AI in schoolwork.

→ Lesson: ethics and responsibility.

- Workshop on “weird” content

The teacher shows an obviously wrong answer.

→ Lesson: AI can be confidently wrong.

High school (ages 14–18)

Goal: operational competence and understanding of limitations.

Method: real-world examples, case studies, guided use.

Practical examples

- “Prompt Challenge” Project

Students compete by creating the best prompt for a given task.

→ Learning outcome: precision and strategy in making requests.

- Bias Workshop

Example: generate faces or descriptions and discuss distortions.

→ Learning outcome: recognizing discrimination and stereotypes.

- AI as a Personalized Tutor

The AI explains a difficult concept, then the student verifies it with the class.

→ Learning outcome: using AI as support, not a shortcut.

University

Goal: analytical thinking, design skills, real-world cases.

Method: research, prototyping, critical analysis.

Practical examples

- Designing AI workflows

Students outline a process (e.g., data analysis) and decide where to integrate AI.

→ Lesson: thoughtful and intentional integration.

- Hallucination analysis

Students study false answers and understand their causes.

→ Lesson: the probabilistic nature of AI models.

- Applied ethics

Discussions on real cases (hiring, predictive justice, healthcare).

→ Lesson: social and decision-making impact.

Companies – operational workers

Goal: responsible productivity.

Method: examples of daily tasks that can be automated.

Practical examples

- Writing emails / summaries / reports with AI

Real examples taken from daily work.

→ Lesson: AI speeds up work, but outputs must be verified.

- Workbook: “Allowed uses / forbidden uses”

A checklist of what to ask and what to avoid.

→ Lesson: safety, privacy, limitations.

- Mini verification exercises

AI produces a document, and the employee corrects it.

→ Lesson: human supervision.

Companies – managers and decision makers

Goal: strategy, governance, decision quality.

Method: decision-making simulations and case studies.

Practical examples

- Simulation: “AI proposes a decision — what do you do?”

Example: a model suggests sales priorities.

The manager must validate, request data, or challenge it.

→ Lesson: AI as an advisor, not a judge.

- Building the company AI policy

Managers decide rules on data, privacy, and auditing.

→ Lesson: governance.

- AI roadmap

Analysis of value areas, risks, and social impacts.

→ Lesson: sustainable strategy.

Older adults / citizens

Goal: autonomy, digital safety, reducing the technology gap.

Method: practical demonstrations, zero technical jargon.

Practical examples

- How to recognize an AI‑generated scam message

→ Lesson: online safety.

- Voice assistants as daily support

Recipes, reminders, reading texts.

→ Lesson: AI as a practical life helper.

- Mini workshop on identifying fake news

The AI shows a fake news example, and the group debunks it together.

→ Lesson: critical thinking.

Citizens in general

Goal: awareness and basic competence for everyone.

Method: everyday examples.

Practical examples

- “Where do we encounter AI every day?”

Spotify, Google Maps, e‑commerce, banking, translations.

→ Lesson: AI is already part of daily life.

- FAQ on real risks vs myths

Examples:

- “Does AI steal jobs?” → No, it changes tasks.

- “Is AI alive?” → No.

- “Does AI see everything?” → It depends on the data.

- Introduction to digital critical thinking

How to filter, verify, and compare information.

AI as an Enabler of Inclusion

This is a sensitive and fundamentally important topic.

AI can break down cognitive, linguistic and cultural barriers, adapt teaching to different paces and make knowledge accessible to people with disabilities.

Some examples:

- Text-to-speech tools and screen readers for people with dyslexia

- Real-time translation for non-native students

- Assistive technologies for people with motor disabilities

Why all this?

I lived through the arrival of the web many years ago, and the fears of that time were very similar to those we have about AI today: loss of control, privacy risks, digital exclusion and changes in work.

Even then, the solution was digital literacy, which made it possible to turn fears into opportunities.

Today, as then, AI calls for the same approach: education, critical awareness and ongoing training, to guide change rather than be subjected to it.

Boom, done 💣!
